IP Showcase Theater Schedule

Sunday April 14 to Wednesday April 17, 2024

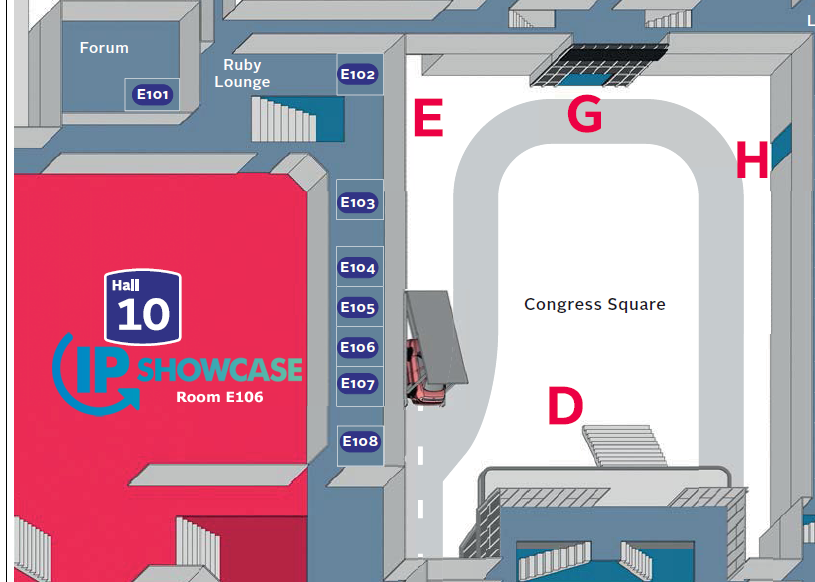

Las Vegas Convention Center, West Hall, booth #W3800

Steve Reynolds, AIMS Chair

Kieran Kunhya - Open Broadcast Systems

Albert Einstein famously said, "If you can't explain it to a six-year-old, you don't understand it yourself," but unfortunately a lot of the ST-2110 training out there doesn't abide by this and goes straight into the weeds and into jargon. This presentation won't do that; it is designed to be simple enough that after watching you can explain ST-2110 to someone at a dinner party.

Ciro Noronha, Cobalt Digital Inc.

The Reliable Internet Stream Transport (RIST) Activity Group (AG) was formed by the Video Services Forum (VSF) in February 2017 in response to the industry's need for an interoperable protocol for professional-grade contribution over the Internet. Since then, the RIST AG has published three main Specifications: Simple Profile (TR-06-1), which provides reliable transmission; Main Profile (TR-06-2), which provides tunneling, security, and authentication; and Advanced Profile (TR-06-3), which provides a foundation to run any protocol over RIST. Besides that, the RIST AG has so far published five ancillary Specifications for other functionality, namely Source Adaptation, WireGuard, RIST Relay, Decoder Synchronization, and Multicast Discovery. This talk reviews the work done in the RIST AG since 2017, and gives a preview of what is coming next.

Rosso Koji Oyama, Xcelux Design, Inc.

I have been actively engaged in MoIP (Media over Internet Protocol) facility network design and the development of MoIP network training materials for several years. Recently, there has been a notable increase in the number of MoIP facility designs in Japan, signaling a growing need for the continuous education and development of engineers involved in MoIP infrastructure. In particular, insights and tips derived from practical designs are expected to be invaluable for future real-world implementations. In light of this, I have leveraged my experience to construct training materials that incorporate practical content, use actual network switches, and focus on the design, implementation, and verification of both the media plane and control plane networks.

This presentation shows you some of the contents of these training materials and shares tips on how to effectively nurture engineers based on past experiences. The journey begins with the fundamentals of Ethernet/IP protocols and progresses through individual themes such as L2/VLAN, PTP, L3/VRF, Unicast Routing/OSPF, Multicast Routing/IGMP/PIM, MoIP design methodology, IP addressing, Verification/Debugging, and more. Each topic involves hands-on learning using real equipment, including network switches. The final challenge involves designing, implementing, and validating a network from scratch.

There is a vision to make these materials publicly accessible, allowing anyone interested to benefit from them. The objective is to contribute to the broader community of network engineers and enthusiasts by providing a resource that not only imparts theoretical knowledge but also offers practical, real-world application. By starting with the basics and progressively delving into advanced concepts, this training series seeks to empower individuals to confidently tackle the challenges associated with MoIP network design.

The goal is to create engineering resources that foster a deeper understanding of MoIP networks and facilitate the development of skilled professionals in this rapidly evolving field. The commitment to openness and knowledge sharing underscores my dedication to advancing the collective expertise within the MoIP community, fostering innovation, and preparing engineers for the dynamic landscape of modern network design.

Nicolas Sturmel, NSI2 Consulting.

Following the success of Network 101 sessions addressing IP protocols and quality of service, our focus now turns to routing. Despite prevalent use of VLANs and layer 2 topologies in network setups, understanding layer 3 dynamics is increasingly pivotal.

Transitioning to layer 3 networking entails a shift towards IP-based routing, departing from MAC-based forwarding. This transition introduces crucial concepts like gateways, serving as network entry and exit points, and metrics, determining optimal paths for data transmission.

Exploring routing raises questions about multiple routes to a single destination, prompting a deeper dive into routing algorithms and their role in traffic management.
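As a minimal sketch of how multiple routes to a single destination might be resolved, the example below assumes the common rule of longest prefix match first, then lowest metric. The routing table entries, gateways, and the `select_route` helper are hypothetical and serve only as illustration; real routers and IP stacks apply additional criteria.

```python
import ipaddress

# Hypothetical routing table: (destination network, gateway, metric).
ROUTES = [
    ("0.0.0.0/0", "192.168.1.1", 100),     # default route
    ("10.0.0.0/8", "192.168.1.254", 20),
    ("10.1.0.0/16", "192.168.1.253", 50),
    ("10.1.0.0/16", "192.168.1.252", 10),  # same prefix, lower metric
]


def select_route(destination, routes=ROUTES):
    """Pick a route: longest matching prefix first, then lowest metric."""
    addr = ipaddress.ip_address(destination)
    candidates = []
    for prefix, gateway, metric in routes:
        network = ipaddress.ip_network(prefix)
        if addr in network:
            candidates.append((network.prefixlen, metric, gateway, prefix))
    if not candidates:
        return None  # no matching route and no default gateway
    # Longest prefix sorts first; ties are broken by the lowest metric.
    candidates.sort(key=lambda c: (-c[0], c[1]))
    _, metric, gateway, prefix = candidates[0]
    return {"prefix": prefix, "gateway": gateway, "metric": metric}


if __name__ == "__main__":
    for dest in ("10.1.2.3", "10.2.0.1", "8.8.8.8"):
        print(dest, "->", select_route(dest))
```

In this sketch, traffic for `10.1.2.3` matches three prefixes, and the more specific `/16` entry with the lower metric wins over both the `/8` route and the default gateway.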

Furthermore, understanding the behavior of embedded Linux IP stacks illuminates networking protocols and routing table management, essential for network optimization and troubleshooting.

Virtual IP addresses add another layer of complexity, facilitating load balancing, failover, and network segmentation.

Throughout this discussion, we will elucidate these concepts with practical examples, offering clarity and insight into routing fundamentals. By dissecting real-world scenarios, attendees will gain a comprehensive understanding of routing's role in modern network infrastructure. Join us as we navigate the intricacies of layer 3 networking and its implications.

John Mailhot, Imagine Communications

Gareth Wills, Imagine Communications

Some media companies are considering moving most or all operations to the cloud, but then wish to keep a footprint on the ground just in case. Others take the opposite approach of looking towards cloud-hosted resources as a resiliency strategy while keeping the primary operations on-prem. In this talk we consider the question from a technology and operational perspective, looking at the different workflow steps in the media supply and delivery chain and the technical factors that enable ground/cloud hybrid operations, developing a vocabulary and set of questions that end users can use to evaluate their own proposed operating model.

Andy Rayner, Appear

The adoption of internet connectivity for live production

The increasing use of public internet connectivity for live broadcast production is driven by the significantly lower price point. This in turn creates viability for more live production and increases the production value of broadcasts. The quality of standard internet connectivity provision has also significantly increased, making this a viable approach.

This use also drives the need for more robust connectivity protection mechanisms than have traditionally been used for fibre. ARQ technology has seen significant adoption in the last couple of years for this live feed connectivity, and it is also necessary to get into public cloud infrastructure.

This presentation will outline use cases and describe the technology used to make this work effectively.

Chris Clarke, Cerberus Tech Ltd

In this presentation, we explore the rationale behind the development of an orchestration and automation platform tailored for our internal use. Our aim was to empower engineers and operators with the tools necessary to streamline and standardize customer deployments, thereby enhancing efficiency and ensuring uniformity across projects.

Through a detailed exploration of the platform's architecture and functionalities, we illustrate how orchestration and automation principles are applied to complex IP workflows. By centralizing control and management processes, we enable seamless coordination of various third party software components involved in live IP video delivery, ensuring smooth operation and optimal performance.

Furthermore, we address the pivotal decision to extend the accessibility of our platform beyond internal usage. Recognizing the growing demand for self-service solutions within the industry, we made the strategic choice to enhance our platform with a user-friendly front end, making it accessible to our customer base. This decision not only facilitates customer autonomy but also empowers them to leverage best-in-class IP workflows tailored to their specific needs.

Throughout the presentation, real-world case studies and practical examples demonstrate the tangible benefits of orchestrating and automating IP workflows. From streamlining deployment processes to enhancing scalability and flexibility, our platform exemplifies the transformative impact of automation on the live IP video landscape.

In conclusion, this presentation offers invaluable insights into the significance of orchestration and automation in optimising IP workflow management. By harnessing the power of automation, organizations can unlock efficiency gains, standardize operations, and deliver superior live IP video experiences to their audiences.

John Mailhot, Imagine Communications

Kieran Kunhya, Open Broadcast Systems

As video processing workflows move into the cloud, there is a real need in the industry for efficient transport of live signals between parts of the workflow. The Video Services Forum Ground-Cloud-Cloud-Ground working group recently published VSF TR-xx, which includes an API definition that allows applications to catch and throw AV content between workflow steps in an overall production workflow. This recommendation provides tools and methods for managing synchronization among the parts of the signal flow, while also optimizing overall latency. The methods and API are independent of the underlying cloud provider, allowing applications to target multiple hosting scenarios, while the hosting platforms can implement the most efficient transport for this content underneath this common API.

Nir Nitzani, NVIDIA

What we call the new era of time in the data center starts with enabling a time service that locks all compute nodes in the cloud to a universal time propagated by a grandmaster clock, a network device that normally connects to a GPS system to provide Coordinated Universal Time (UTC). Time sync across all the network and compute devices is achieved by running a protocol stack that implements the Precision Time Protocol (PTP).
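The arithmetic behind PTP's delay request-response exchange can be sketched as follows. This is a simplified illustration that assumes a symmetric network path and ignores correction fields; the function and parameter names are hypothetical.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Estimate clock offset and path delay from one PTP exchange.

    t1: Sync message leaves the master      (master clock)
    t2: Sync message arrives at follower    (follower clock)
    t3: Delay_Req leaves the follower       (follower clock)
    t4: Delay_Req arrives at the master     (master clock)

    Assumes the path delay is the same in both directions.
    """
    mean_path_delay = ((t2 - t1) + (t4 - t3)) / 2
    offset_from_master = ((t2 - t1) - (t4 - t3)) / 2
    return offset_from_master, mean_path_delay


if __name__ == "__main__":
    # Follower clock runs 50 units ahead; one-way delay is 10 units.
    offset, delay = ptp_offset_and_delay(t1=100, t2=160, t3=200, t4=160)
    print(f"offset={offset}, delay={delay}")
```

The follower subtracts the estimated offset from its own clock; any asymmetry between the two directions shows up directly as an offset error, which is one reason hardware timestamping and PTP-aware switches matter.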

SMPTE has specified in its ST 2059 standards the use of Precision Time Protocol (PTP), as defined in the IEEE 1588 standards, for media signal generation and synchronization. Using accurate timing can now solve problems that the industry has been dealing with for decades, in new and innovative ways. Network congestion can be addressed with time-based congestion avoidance algorithms and time-based steering. Database locking time for serialization assurance can be significantly reduced to increase scale, and cache coherency can be achieved at much larger scale. All of that translates to CAPEX and OPEX savings, doing more with existing compute, network, and storage, and increasing productivity by reducing workload runtimes.

Performance requires not only accuracy but a design that leverages the increase in accuracy (hardware and software).

New emerging use cases are pushing to have PTP in the AI cluster for a few reasons:

- Accurate and synced telemetry
  - For debuggability
  - For visualization
  - For profiling the effect of the network on workloads

- Synchronized collectives
  - Improving performance
  - Reducing OS noise when all machines are synced

- Specific workloads: databases such as Spanner
  - Supports 1588/PTP as a time source and defines the max uncertainty value for the DB

Nik Kero

Over the last few years, the Precision Time Protocol (PTP) has evolved to become the preferred method for accurate time transfer over Ethernet networks for every application domain. PTP being an IEEE standard (IEEE 1588) helped but was by no means the only reason for this development. Semiconductor and device manufacturers alike have been adding PTP hardware support to their network products – a mandatory requirement to reach sub-µs accuracies. Most importantly, PTP can be tailored to the specific requirements of an application domain via PTP Profiles – a feature many industries have made extensive use of. The All-IP Studio, for example, uses the PTP broadcasting profile (SMPTE ST 2059-2) for accurate time transfer.

As a physical transport medium, Ethernet has superseded legacy solutions which were commonly used for many applications in the past. Ethernet is inherently asynchronous with only two adjacent nodes being synchronized with each other. This feature greatly simplifies deployment and maintenance and was possibly the driving factor of its success. When it comes to time and frequency transfer there is an obvious drawback. Accurate time can be transferred via a constant stream of packets, while frequency cannot.

Every end node must regenerate the frequency derived from the time information. This method has proven to be sufficiently accurate for many applications and is widely deployed, yet it has its limits concerning overall accuracy. If the quality of the time information deteriorates, the quality of the regenerated frequency will suffer as well. Specifically optimized digital Phase-Locked Loops can mitigate that effect but only to a certain extent. If end devices require accurate as well as highly stable frequencies for their operation, this limitation must be carefully considered. To circumvent this problem, the local synchronicity of Ethernet can be extended to provide a common frequency for the complete network. How can this be accomplished?

Whenever two devices establish a communication channel via a physical medium, a transport frequency must be provided by one of the two nodes, to which the other must synchronize. In standard Ethernet, the selection of the respective devices taking over that role is arbitrary. If, however, the selection process is made user definable, a common frequency can be propagated through the complete network.

In this presentation, we will describe SyncE’s basic principles as specified by ITU. We will highlight the prerequisites of network devices to comply with SyncE requirements. Furthermore, we will focus on the software and system aspects of deploying and maintaining a SyncE network. Special consideration will be given to how best to combine SyncE and PTP to improve both the accuracy and the resiliency of time and frequency transfer. Although SyncE was primarily designed to provide highly accurate time and frequency for modern telecom applications, we will analyze whether and to what extent the broadcasting industry can benefit from this technology. The presentation concludes with real-world measurements in networks with SyncE and PTP support. We will highlight its performance under different operating conditions and demonstrate the impact of different failure modes. We will compare the performance of PTP with SyncE-assisted PTP.

Andy Rayner, Appear

IP media connectivity within compute - where the industry is heading

An overview of the differences between 'traditional' linear IP media connectivity and the way connectivity needs to work within a live production software environment.

The industry is on an inevitable move towards entire software live production workflows. This transition necessitates the creation of compute-friendly open interfaces to allow the efficient, low latency virtual connectivity of media data between software modules that form the workflow.

There are a number of different vendor-proprietary and open approaches emerging that offer different capabilities. This presentation will overview the current industry state and explore the emerging open standards that offer the potential for the required features.

If possible, the presentation will include a live demonstration.

Jed Deame, Nextera Video

This presentation will discuss the business and technical details pertaining to the hot new AV over IP system called the Internet Protocol Media Experience (IPMX). The core of the presentation will be a review of the individual components detailed in the TR-10 specification dash numbers, with a plain-English description of the functionality they bring, including things like asynchronous senders, copy protection, and FEC. We will also discuss the benefits of open standards and look at the technologies behind IPMX. Finally, we will review how the NMOS control system maps to IPMX devices to provide the same plug and play control as is provided in ST 2110, but with some significant ease of use extensions.

Jack Douglass, PacketStorm

Report on the VSF IPMX Activity Group's work on the IPMX series of Technical Recommendations that are being published as TR-10, the IPMX Dirty Hands Interop, and the IPMX Implementation Testing Event.

Aaron Doughten, Sencore

IPMX is a technology that could benefit the broadcast and professional industries. There is a growing crossover of technology, equipment and operations between these two industries, and with IPMX this crossover continues to grow and make it even easier to bridge the gap for content creation in these markets.

As broadcasters use ST 2110 for high-profile events and look to technologies like NDI for events with smaller budgets, there is an obvious crossover of equipment and operations that IPMX can enable, empowering content creators to have the best of both worlds.

Andrew Starks, Macnica

Explore the unique ecosystem of IPMX and its role in ensuring interoperability among media over IP technologies, contrasted with the broader frameworks of ST 2110, AES67, and NMOS. This presentation will clarify how IPMX's structured approach and specific requirements foster seamless integration, while still accommodating extension with both standards and proprietary innovations. Understand the practical implications of IPMX's opinionated yet flexible framework for achieving compatibility in professional AV and broadcast environments.

Sam Recine, Matrox

Pro AV routing and signal management equipment has introduced a mixture of proprietary solutions. None of these are naturally interoperable with each other. We will cover what IPMX is doing to restore the bridge between asset classes previously handled by mini-converters.

Thomas True, NVIDIA

Michael Kohler, Fuse Technical Group

Engineering multi-screen live events is a never-ending challenge; creative clients executing their grandiose vision with never-before-seen technology, live on stage, under budget, on schedule provides the ultimate high-pressure environment for engineers to practice their craft. As high-end LED screens become more affordable, screens on the average production are getting larger, higher resolution, and more plentiful. As the number of pixels being driven increases, so does the complexity of the systems driving them. At the same time, the reality of physical production requires shorter timelines, and of course, sparser budgets.

As an engineer working in live events, the transition to Video over IP, specifically SMPTE 2110, provides a tantalizing glimpse into a future world where our systems have been fundamentally rearchitected to scale better. Utilizing Video over IP replaces a traditional inputs and outputs system model with a simple bandwidth calculation permitting a more flexible utilization of resources up to the limits of the network. In the world of IP Video systems, video no longer needs to be limited to standard resolutions, aspect ratios, or refresh rates. The processing chain, formerly racks and racks of bespoke processing equipment can be rebuilt virtually into software defined applications running on the network, allowing for ultimate flexibility in system design, cost savings by more granularly allocating resources and bandwidth, and allowing us to reap the benefits of modern compute resources such as high-performance real-time rendering systems.
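The bandwidth calculation mentioned above can be sketched roughly as follows. This is an approximation under stated assumptions: only active pixels are carried, sampling is 4:2:2 at 10 bits (an average of 20 bits per pixel), and RTP/UDP/IP/Ethernet overhead is ignored. The function names and the 90% headroom figure are hypothetical.

```python
def video_payload_bps(width, height, fps, bits_per_pixel=20):
    """Approximate payload bit rate of an uncompressed video flow.

    bits_per_pixel=20 assumes 4:2:2 sampling at 10 bits per sample.
    Packet header overhead is not included.
    """
    return width * height * fps * bits_per_pixel


def flows_that_fit(link_bps, flow_bps, headroom=0.9):
    """How many identical flows fit on a link, keeping some headroom (assumed)."""
    return int((link_bps * headroom) // flow_bps)


if __name__ == "__main__":
    hd = video_payload_bps(1920, 1080, 60)
    print(f"1080p60 payload: {hd / 1e9:.2f} Gb/s")
    print("Flows on a 25 Gb/s link:", flows_that_fit(25e9, hd))
    # A non-standard canvas is just another set of numbers in the same formula.
    print(f"3000x1000 @ 50: {video_payload_bps(3000, 1000, 50) / 1e9:.2f} Gb/s")
```

Because the only inputs are pixel count, frame rate, and bit depth, an unusual LED wall resolution is budgeted in exactly the same way as a standard broadcast format.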

Of course, switching from SDI to ST 2110 alone is not a silver bullet. The modern infrastructure of high-speed network fabrics, data processing accelerators, and legacy conversion needed to build a functional ST 2110 pipeline today is very expensive, and while video engineers are clever, managing IP infrastructure is so fundamentally different from video infrastructure that it will require engineers to learn some new IT skills. Additionally, ST 2110 left out a few key details critical to the live events world. In contrast to broadcast installations, which are planned years in advance and operate 24/7, live productions tend to be ephemeral, so control protocols need to be well defined and enforced. Enter IPMX, a more detailed specification that fills in the blanks left by ST 2110's broadcast originators.

Finally, the move to IP enables a new ecosystem of software-based testing tools. In this presentation, we will show off a field-tested, backpack-friendly SMPTE 2110 test setup, enabled by NVIDIA RivermaxDisplay, which can output any desktop application over the network to the screen. This helps with troubleshooting and diagnosing systems without cost-prohibitive broadcast test gear.

Sergio Ammirata, SipRadius

The need to stream to large numbers of viewers is now common. Practical solutions need to provide low latency, high quality delivery, which is highly secure and simple to use.

The solution lies in the use of RIST (reliable internet stream transport), which today is augmented by IPMX (IP media experience). These are open standards and widely recognised. Simplifying the process of building RIST into practical applications is libRIST, a library of routines. I was involved in the core creation of RIST, and in the libRIST library.

This presentation will take interested parties through the architecture of a streaming service for potentially large numbers of concurrent viewers. A stream – delivered from common apps like ffmpeg, VLC or OBS – is received by a relay, where it is buffered and offered to the potential audience.

The relay provides secure AES encryption and EAP-SRP6a authentication which allows the content owner to set the level of security required, using a simple username and password per connected client.

For scalability, this architecture uses the rist2rist utility. As the name suggests, this utility takes in a RIST stream and distributes it to multiple clients. Each rist2rist can handle up to 100 simultaneous connections and still deliver bullet-proof packet loss protection.

Critically, rist2rist does not decrypt and re-encrypt the stream, so there is no security risk and, more importantly, it adds virtually no latency.

The structure further scales by calling multiple rist2rist instances in the cloud, creating a virtual CDN as required from moment to moment. We are providing services to a major American broadcaster which supports 1500 or more simultaneous users, receiving very high or ultra high quality. Anywhere in North America end-to-end latency is less than 300ms; intercontinental delivery is still significantly less than one second glass to glass.
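As a back-of-the-envelope sketch of this scaling, the snippet below estimates how many rist2rist instances an audience would need. It assumes a single tier of instances and the stated limit of 100 connections each; the helper name is hypothetical and does not correspond to any rist2rist option.

```python
def rist2rist_instances(viewers, per_instance=100):
    """Minimum number of distribution instances for a given audience size."""
    if viewers < 0 or per_instance <= 0:
        raise ValueError("viewers must be >= 0 and per_instance must be > 0")
    # Integer ceiling division: round up to cover a partially filled instance.
    return -(-viewers // per_instance)


if __name__ == "__main__":
    for audience in (80, 1500, 1501):
        print(audience, "viewers ->", rist2rist_instances(audience), "instance(s)")
```

For the 1500 simultaneous users mentioned above, this works out to at least 15 instances, with more added or removed as the audience changes from moment to moment.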

The use of the SRP (secure remote password) protocol protects the content end-to-end. This advanced approach to security is authenticated by the client demonstrating to the server that it knows the password without transmitting the password or any data from which the password can be derived, eliminating the possibility of interception.
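The idea can be illustrated with a toy version of the SRP-6a arithmetic. This is a sketch only, not the RIST or libRIST implementation: the group parameters are far too small for real use, the hashing is simplified, and all function names are hypothetical.

```python
import hashlib
import secrets

# Toy group parameters, assumed for illustration only. Real deployments use
# large standardized SRP groups; this modulus is far too small to be secure.
N = 2**127 - 1  # a Mersenne prime
g = 3


def H(*parts):
    """Hash the parts together and return the digest as an integer."""
    digest = hashlib.sha256()
    for part in parts:
        data = part if isinstance(part, bytes) else str(part).encode()
        digest.update(data + b"|")
    return int.from_bytes(digest.digest(), "big")


k = H(N, g)  # SRP-6a multiplier


def private_key(salt, username, password):
    """Simplified derivation of the client's private value x."""
    return H(salt, username, password)


def register(username, password):
    """The server stores only the salt and verifier, never the password."""
    salt = secrets.token_bytes(16)
    verifier = pow(g, private_key(salt, username, password), N)
    return salt, verifier


def login(username, password, salt, verifier):
    """Run both sides of one exchange; return (client_key, server_key)."""
    a = secrets.randbelow(N - 2) + 1       # client ephemeral secret
    A = pow(g, a, N)                       # sent client -> server
    b = secrets.randbelow(N - 2) + 1       # server ephemeral secret
    B = (k * verifier + pow(g, b, N)) % N  # sent server -> client
    u = H(A, B)                            # scrambling value known to both
    x = private_key(salt, username, password)
    client_secret = pow((B - k * pow(g, x, N)) % N, a + u * x, N)
    server_secret = pow((A * pow(verifier, u, N)) % N, b, N)
    return H(client_secret), H(server_secret)


if __name__ == "__main__":
    salt, verifier = register("viewer", "s3cret")
    good = login("viewer", "s3cret", salt, verifier)
    bad = login("viewer", "wrong", salt, verifier)
    print("correct password, keys match:", good[0] == good[1])
    print("wrong password, keys match:  ", bad[0] == bad[1])
```

Only `A`, `B`, and the salt cross the network in this sketch. Both sides arrive at the same session key only when the client knows the password, and a real protocol run would finish with each side proving possession of that key.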

With a client which is also RIST based, this solution provides a solid DRM protected end-to-end system.

This is a proven architecture providing secure, one to very many, low latency, high quality content distribution.

Kevin Salvidge, Leader PHABRIX

When we transitioned from analogue to SDI, everything changed. We needed to find/make new tools to look at video/audio/captions. Now we face the next transition from SDI to IP and yes, you guessed it, everything is changing again.

With SDI, content arrives continuously, which allows us to carry the audio, the captions and several other things in the video payload. New toolsets and new methods were created to analyze them. Well, we've done it again: we've moved everything around and put it on IP. With IP, content arrives in packets, and packets arrive when they arrive, so we now need to find/make new tools to look at network traffic flows and video/audio/captions and make sure the content is compatible with being put back to SDI if needed.

Fortunately, IP is utilizing tried and tested network protocols that have been incorporated into a suite of SMPTE standards.

However, before we explore the new IP test and measurement techniques, let's not forget that the objective of an IP-based facility remains the same as the old SDI one, namely to deliver high-quality programming to your customers. They will still be watching the same pictures, irrespective of the infrastructure that you used to create them. Traditional test and measurement routines like waveform, vectorscope and picture monitoring are still essential. You now need test and measurement products for monitoring and analyzing the color signal elements and the new IP transport layer.

All broadcast systems require stable references. IP-based facilities are no different. Frequency-referenced and phase-referenced black burst and tri-level sync are replaced by time-based PTP (SMPTE 2059), so monitoring the long-term performance of PTP is critical.

With SMPTE 2110, the timing information has been removed from the underlying hardware layer. Distribution is asynchronous. Video images must be captured and displayed frame-accurately so it is essential to ensure that IP timing issues do not cause disruption.

IP timing is quite different from SDI timing. In IP we don't directly measure the physical layer jitter but instead the packet timing. Monitoring long-term timing parameters is vital.
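As one generic example of a packet timing measurement, the sketch below computes the running interarrival jitter estimate defined for RTP in RFC 3550. It is offered as an illustration only: ST 2110 defines its own sender timing measurements, which are not reproduced here, and the function name and sample values are hypothetical.

```python
def interarrival_jitter(send_times, receive_times):
    """Running RTP interarrival jitter estimate (RFC 3550 smoothing).

    send_times and receive_times are per-packet timestamps in the same units.
    """
    jitter = 0.0
    for i in range(1, len(send_times)):
        # Change in transit time between consecutive packets.
        d = (receive_times[i] - receive_times[i - 1]) - (
            send_times[i] - send_times[i - 1]
        )
        # Exponential smoothing with gain 1/16, as specified in RFC 3550.
        jitter += (abs(d) - jitter) / 16
    return jitter


if __name__ == "__main__":
    sent = [0, 10, 20, 30, 40]
    steady = [5, 15, 25, 35, 45]  # constant network delay
    bursty = [5, 15, 41, 42, 45]  # one late packet, then catch-up
    print("steady:", interarrival_jitter(sent, steady))
    print("bursty:", interarrival_jitter(sent, bursty))
```

Tracking a value like this over hours rather than seconds is what turns a single measurement into the long-term monitoring described above.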

Although you have started your IP migration, legacy SDI-based equipment will still need to be monitored. True hybrid test and measurement products can display simultaneous SDI and IP measurements, ensuring your facility performs seamlessly throughout your transition from SDI to IP.

This presentation details the new test and measurement techniques needed to ensure flawless operation.

Adi Rozenberg, AlvaLinks

This presentation will focus on the vital pieces of information that are hidden from any video over IP user today. It will show examples of captures and metrics that are key for any video over IP user.

The presentation will focus on precise metric collection, such as error rate, jitter, latency, RTT, route discovery, and hop changes. Such parameters are critical for applications like RIST, SRT, NDI, ST2110, ATSC3.0 and bonded applications. These KPIs and their precise harvesting are missing from standard IT and observability tools today, as IT solutions are not targeted at video applications.

Ievgen Kostiukevych, EBU

The shift to media over IP has been a crucial stepping stone, but it is not the final destination. The ultimate goal is to reshape the media value chain, delivering even on-prem a cloud-like experience characterized by scalable and shareable resources, the disaggregation of Software Media Functions (SMFs), and underlying compute-agnostic architecture. The EBU Members call this new infrastructure architectural paradigm the Dynamic Media Facility (DMF).

The Dynamic Media Facility envisions a world where Software Media Functions (SMFs) replace rigid hardware units. The SMFs are the “right-sized” units of software that carry out common media operations and that are suitable to be deployed using container technology. The concept encourages interoperability, customization, and flexibility, enabling media organizations to adapt to changing demands. The DMF promotes a change in infrastructure management approach from "Pets" to "Cattle." Instead of treating media appliances like "Pets" that require individual care, resources become "Cattle" – interchangeable units that can be easily deployed, managed, and scaled.

Adi Rozenberg, AlvaLinks

In the past, the industry tried to use the Media Delivery Index, one of the early attempts to grade a network link. This presentation will provide guidance on which metrics are important to collect and monitor, and on how to simplify understanding with a newly proposed scoring method: the Delivery Performance Score (DPS). It will present formulas and examples so the audience can understand how the score performs in various scenarios.

Stephane Cloirec, Harmonic

In today's cost-conscious video world, moving away from satellite to IP delivery technology is a key trend for video service providers. Yet, a variety of IP distribution methods exist, each with its own set of advantages and disadvantages. Successfully navigating the complexities of the IP delivery landscape requires a comprehensive understanding of the available methods for transporting video over the internet.

This tutorial will provide attendees with an understanding of the different methods for delivering video over IP, specifically looking at managed IP and open-internet possibilities like SRT or CDN-based / HLS as options. The pros and cons of each method will be discussed. For example, achieving low latency with one IP delivery approach may cost three to four times more than another method. The distinctive benefits of each IP video delivery option will be examined, taking into consideration factors such as low latency, reduced egress costs, monitoring, customization, and more. Ultimately, the insights shared in this tutorial will help attendees make the best choice for IP video distribution based on their specific applications and requirements.

The tutorial will share real-world examples that illustrate why leading media companies chose one IP video delivery method over another.

Tsuyoshi Kitagawa, Telestream

In the rapidly evolving world of broadcast and media production, the transition from Serial Digital Interface (SDI) to Internet Protocol (IP) has been a transformative journey. While IP offers unparalleled flexibility and scalability, it has often been challenging to replicate the familiar SDI user experience and use cases. This IP showcase, titled "Seamless Transition: Emulating SDI Experience with Make-Before-Break IP Switching," aims to demonstrate how advanced make-before-break IP switching technology enables customers to achieve the same user experience and use cases as SDI, while harnessing the benefits of IP infrastructure.

This session will cover:

- Understanding the Shift to IP: The showcase will begin by highlighting the reasons behind the industry's shift from SDI to IP, emphasizing the advantages of IP, such as increased flexibility, scalability, and efficiency.

- Challenges in Replicating SDI Experience: The presentation will delve into the challenges broadcasters and media professionals face when trying to emulate the traditional SDI experience in the IP world, including the need for seamless signal switching and minimal latency.

- Make-Before-Break IP Switching: This tutorial will introduce the concept of make-before-break IP switching, explaining how it works and its significance in mimicking the SDI experience. Attendees will gain insights into the technology's core principles.

- Mimicking SDI Use Cases: The showcase will provide practical demonstrations of how make-before-break IP switching can replicate common SDI use cases, such as live production, studio operations, and remote broadcasts. Attendees will witness the seamless transition between IP sources, closely resembling the SDI experience.

- Advanced Tutorial: This advanced tutorial will cater to engineers, broadcasters, and media professionals, providing in-depth technical insights into configuring and implementing make-before-break IP switching solutions. Attendees will learn about best practices, system requirements, and potential challenges.

- Future-Proofing with IP: The presentation will conclude by highlighting how embracing make-before-break IP switching not only replicates the SDI experience but also future-proofs broadcast and media production workflows by harnessing the full potential of IP technology.

By attending this IP showcase, participants will gain a comprehensive understanding of how make-before-break IP switching technology can bridge the gap between SDI and IP, allowing them to enjoy the familiarity of SDI while benefiting from the versatility and scalability of IP infrastructure. This presentation promises to be an invaluable resource for those looking to navigate the evolving landscape of broadcasting and media production.

Gerard Phillips, Arista Networks

Ryan Morris, Arista Networks

ST2110 live production was initially present as islands in an SDI sea. Expertise and capability have grown, and ST2110 has proven robust and is now commonplace. The next logical step is to continue the expansion, out of the studio or playout facility, and to benefit from native IP connectivity end-to-end. Sharing media content freely across large organisations, or between organisations, enables creativity and lowers costs. As we start to connect these systems with native IP, we begin to build large and complex networks, and we need to consider how these can be built with both security and resilience / reliability in mind. This talk addresses how this valuable connectivity can be developed without compromising security or falling foul of large system-level failure domains, and how to facilitate the use of overlapping and often private address spaces.

Andreas Hildebrand, Lawo AG

This presentation looks into the principles of flow configuration and packet setup when using networked audio transport. Andreas will explain the different options in RAVENNA, AES67, SMPTE ST 2110 and other popular AoIP solutions and provide advice on how to best transport MADI signals over AoIP. At the end, you'll know how to use flow configuration to optimize your transport latencies.

Jean-Baptiste Lorent, intoPIX

Several recommendations from VSF, AMWA, MPEG, and the IETF exist to empower live production workflows with the new JPEG XS ISO standard. Join this session to learn how to integrate and use JPEG XS in IP-based workflows such as SMPTE 2110, WAN, IPMX, or Cloud. Learn some of the key benefits of the standard in live production workflows to bring more efficiency, and reduce bandwidth and cost.

Visit the IP Showcase booth W3800 for an open discussion on AES67. No registration required.

David Edwards, Net Insight

This paper explores the multifaceted capabilities of JPEG XS and its integration with the TR-07 protocol. It looks beyond the codec's virtually lossless compression and explores additional aspects that increase its suitability for high-quality video carriage over IP networks and that are contributing to the rapid adoption of these technologies.

The paper focuses on the toolkit provided by TR-07, which facilitates seamless networking across diverse physical interconnects. By offering easy termination options such as SMPTE 2110, SMPTE 2022-6, or SDI, the TR-07 protocol effectively bridges the common broadcast topologies.

JPEG XS scalability is emerging as pivotal for enabling integration across entire broadcast workflows. Because it can be implemented in classical hardware, COTS software processing engines, and cloud instantiations, the technology can span from single-service network edge deployments to channel-dense production centers and up to the consumer delivery border.

The paper not only outlines the compression properties of JPEG XS but also emphasizes its low-complexity implementation. This, combined with flexible IP packaging, is simplifying network carriage and handoff across broadcast workflows. The integration of JPEG XS and TR-07 is explored in detail, shedding light on how this powerful combination is reshaping the landscape of broadcast technology, from camera source to program presentation.

Adi Rozenberg, AlvaLinks

This presentation will review how the RIST Technical Recommendations preserve multicast workflows with native support while adding new capabilities like encryption and authentication. It will also describe the recently released TR on multicast discovery and why it is important for multicast-based applications.

Timothy Stevens, Verizon

Partnering with AWS, Zixi and Vizrt, Verizon and the NHL have developed several new advanced workflows, taking advantage of 5G connectivity and MEC (Mobile Edge Computing) to deliver live camera to cloud video streams for REMI production. Public 5G MEC will utilize both Zixi and our AWS partners to deliver multiple streams from live events to access cloud-based video workflows for any of a number of production requirements.

Once in the cloud environment, video streams will take advantage of Vizrt’s software stack for live replay clipping, graphics creation, composition, and video mixing to create a low-latency video program for distribution to partners, MVPDs, or direct to consumer. The flexibility of these applications, coupled with low-latency edge compute, will usher in a new era of remote and virtualized production capabilities, especially designed to address the growing needs of sports production. Demonstrations of the technology stack are available to experience in the Verizon booth at NAB.

Stefan Ledergerber, Simplexity

Building a contemporary All-IP 2110 facility today involves a vast number of considerations, far more than previous projects based on traditional SDI technology. Besides new topics like network topology and subnet design, PTP architecture, multicast address management, and stream format definitions, there are even more difficult questions to answer, such as ensuring security, establishing robust monitoring, and, probably the most challenging of all, mastering the complexity of the setup with a suitable control system and user interface. Only then can we truly unlock new workflows and realize increased efficiency.

Addressing these challenges requires a significant amount of expertise and effort from the customer side. Today, since not many organizations have completed all-IP projects yet, there's a lack of readily available guidance. This makes it crucial to learn from others' experiences to avoid reinventing the wheel and avoid repeating mistakes.

In this situation NMOS can reduce the complexity of the questions by offering some mechanisms which have been thought through already. It can be a common denominator between all kinds of products and vendors. While commonly known parts like NMOS IS-04/05 help with discovering and connecting devices and their streams, NMOS today actually addresses a wider range of issues inherent in setting up and operating an IP facility, e.g.

- Monitoring of connection status

- Dynamic setup of sender formats and allowing them to align with given receiver capabilities

- Label exchange between devices

- A generic way to realize control of any parameter within a device, including active notifications about changes thereof

This presentation will delve into how NMOS can streamline real-world projects and increase efficiency. Following the same rules means making integration easier, finding technical issues faster, and allowing projects to focus more on important topics like workflow and operation. We'll explore how NMOS specifications can make IP-based facilities work better for everyone involved, optimizing performance and ease of management. Join us as we illustrate the transformative power of NMOS by bridging the gap between equipment and control systems.

Visit the IP Showcase booth W3800 for an open discussion on NMOS. No registration required.

Thomas Gunkel, Skyline Communications

This presentation discusses how media organizations can optimize operational efficiency in a rapidly evolving media landscape to ensure future success. While they need to embrace many general IT concepts for this, traditional IT tools often fall short of the particular needs of media operations.

The needs of media teams involve real-time operations, 24/7 availability, management of high-bitrate video flows, and accurate timing, while common ICT topics are security, data-driven management, and continuous evolution using DevOps principles. To reduce cost, improve efficiency, and innovate workflows and business models over time, both worlds need to be harmonized. MediaOps architecture is the underlying foundation to achieve this.

With a showcase developed together with several tier-I customers in the media industry, the presentation demonstrates how the life cycle of events, work orders, and productions can be managed and automated by applying DevOps principles, including automated inventory onboarding and reservations of offline (people, rooms, etc.) and online (on-prem equipment and cloud-based functions) resources. Cloud-based resources first need to be automatically deployed before they can be configured. Connectivity across technical domains (RF, SDI, IP, etc.) is essential, as are user-specific real-time control and monitoring interfaces. Once events are finished, resources are also automatically undeployed, and resource utilization is tracked for billing purposes.

The presentation concludes with guidelines for media companies to gradually reach their goals and evolve based on a solid MediaOps architecture.

Jonathan Thorpe, Sony Europe

Sony aims to keep their nmos-cpp open source implementation of the AMWA NMOS specifications up to date. Towards this goal, we have recently added support for the MS-05/IS-12 Device Control and Configuration specifications, as well as support for the BCP-003-02/IS-10 Authorization specifications.

This presentation will show how using the nmos-cpp open source software allows easy adoption of NMOS specifications. This will be illustrated by modelling an NMOS Device using MS-05. It will also show how BCP-003-02/IS-10 Authorization can be easily added to existing nmos-cpp developments.

Chris Lennon, Ross Video

Control of media devices and services across platforms (on-prem, public cloud, private cloud, etc.) continues to be the Wild West. As we move more toward IP and hybrid workflows, this often comes up as one of the top challenges yet to be met by the industry.

Media companies, vendors, and platform providers alike are teaming up to tackle this head on in the most open way possible, with an open standards and open source solution, called Catena. SMPTE is leading the way with this initiative of their Rapid Industry Solutions Open Services Alliance group (RIS-OSA). This group is focused on tackling real-world, highly impactful issues in a rapid manner, getting solutions into the hands of the industry before it's too late.

Catena is a security-first technology, using previously industry-accepted approaches wherever possible, rather than re-inventing solutions.

We will give you a quick overview of what Catena is all about, what has already been done, what's left to tackle, and how to get involved.

Alun Fryer, Ross Video

NMOS is a well-designed set of standards to address the needs of control and management of ST 2110 systems. Can we apply any of that good work to non-ST 2110 environments? This presentation outlines the current activity underway in AMWA to bring control of NDI-based media and devices into the NMOS ecosystem. This is part of an ongoing effort to expand the scope of NMOS beyond ST 2110 systems and enable control in environments employing a variety of Media over IP technologies.

NDI provides a simple, low-cost solution for networked media, with a large number of deployments in professional AV and growing footprint into broadcast environments. However, NDI has lacked a standardized approach to centralized control, which is a requirement in most broadcast facilities. Many professional and broadcast users are seeing the benefits of NDI, both stand-alone, and in conjunction with other Media over IP technologies like ST 2110.

AMWA is developing a new specification, BCP-007-01, defining how NMOS will work with NDI media and devices. In this presentation we’ll overview the current activity to draft BCP-007-01 and explore how NMOS can bridge control across NDI deployments and integrate with ST 2110 systems under a single, unified control system.

Discuss new developments and speak with IPMX technology experts at the IP Showcase. No registration required.

Yoann Hinard, Witbe

The ongoing transition in the broadcast industry from QAM to IP streaming represents a seismic shift, not just in technology but in the entire operational paradigm. This presentation will dissect challenges and strategies for quality control (QC) and monitoring in this rapidly evolving environment where platforms like Android TV and RDK are prevalent.

Traditional, controlled broadcast environments are being replaced by dynamic, complex ecosystems where multiple applications and services must harmoniously coexist. The challenge is to maintain the quality of a provider's main service, like linear TV with dynamically inserted ads, while accommodating the diverse requirements for and potential interference from third-party applications. We will share real-world examples where the interaction between these applications has directly impacted service quality.

This transition is complicated by user expectations in a world where third-party applications like Disney+, Netflix, Peacock, and Max are integral to the viewing experience. Consumers expect seamless functionality for these applications, regardless of them being provided by third parties. This places a unique burden on service providers to ensure consistent quality across all content, whether first-party or third-party.

With the QAM to IP transition, the QC process has fundamentally changed. The most common platforms involved are Android TV and Comcast RDK, both of which are updated frequently and with minimal control from the service provider. In addition, more devices need to be tested than ever before, including consumer-grade devices like Fire TV and Apple TV, which all receive their own frequent updates that require new tests.

Content discoverability becomes key in this extremely competitive environment. It is crucial to successfully implement deep indexing, which allows the viewer's voice control or universal search requests to be directed to the provider and not a default Android TV handler, typically YouTube or the Google Play Store.

Another new challenge with this transition is Dynamic Ad Insertion. Ads are typically handled through the video player with IP streaming, which can result in a variety of video errors, including frozen screens, blank slates, and device crashes. New types of monitoring are required to understand and resolve these errors.

Further complicating the transition is the fact that most operations and QC teams are remote. In order to work collaboratively, teams need to reliably and securely access the real devices they test in their intended markets from anywhere in the world. This presentation will discuss useful techniques for remote monitoring, as well as other important considerations.

Thomas Kramer, MainConcept

The debate between software and hardware video encoding has been going on for years. The choice for some simply comes down to whether to run on the GPU or the CPU, but that can be too simplistic for many use cases. Software encoders running on the CPU typically achieve better quality and offer a wider feature set, especially with production formats like 10-bit 4:2:2. For use cases targeting high processing performance instead of quality, GPU encoders are the preferred choice.

The major graphics board providers, AMD, NVIDIA and Intel, develop encoders for their GPUs, offering AVC, HEVC or AV1 hardware options. Although convenient for users operating within a single platform, this has an undesirable effect for multi-platform users: each SDK has a different API. To support each platform, engineers need to integrate the Intel Quick Sync Video, NVIDIA NVENC and AMD AMF (Advanced Media Framework) SDKs separately. And this is only on the encoder side; the same applies to decoding and transcoding. This requires three times the integration work, which is both time-consuming and cost-intensive.

How can developers save time and money integrating software and hardware encoders and decoders from the major vendors? The solution is combining the GPU-based libraries from Intel, NVIDIA and AMD, including software encoders, into a single API. This gives developers the ability to use video codecs providing software processing features as well as hardware encoding or decoding using Intel Quick Sync Video, NVIDIA NVENC/NVDEC and AMD AMF SDK. The primary benefit of a single API is that customers only need a single integration cycle. Plus, there are fewer contact points should any support issues arise. All of this means much shorter development cycles.

In this session, MainConcept will share: The impetus for moving to a single API; How this approach benefits users, software vendors and the GPU manufacturers; Real life examples of where the API has solved complex customer problems across their multi-codec, multi-platform workflows; How providing a single API combining battle-tested software encoders with the most used hardware encoders helps companies bring their solutions to the market in a fraction of the time; and Why a single API helps future-proof a workflow, whether it be on-premises or in the cloud.

Joe Bleasdale, Megapixel VR

Megapixel will discuss how ST 2110 and IPMX have significantly improved large scale LED projects vs traditional baseband technology, not just in Broadcast but in other key verticals too.

- 10:00 - 10:45: No Presentations

- 10:45 - 11:00: AIMS Overview, with Steve Reynolds (Steve Reynolds, AIMS Chair)

- 11:00 - 11:30: How to Explain ST 2110 to a Six-Year-Old (Kieran Kunhya, Open Broadcast Systems)

- 11:30 - 12:00: RIST: Past, Present and Future (Ciro Noronha, Cobalt Digital Inc.)

- 12:00 - 12:30: Mastering the Basics of Media-over-IP Network Design -- Road to Public Access of Practical Training Materials (Rosso Koji Oyama, Xcelux Design, Inc.)

- 12:30 - 13:00: Network 101: Routing (Nicolas Sturmel, NSI2 Consulting)

- 13:00 - 13:30: What is The Cloud Good for, Anyway? (John Mailhot, Imagine Communications)

- 13:30 - 14:00: The Adoption of Internet Connectivity for Live Production (Andy Rayner, Appear)

- 14:00 - 14:30: Orchestration and Automation for cloud hosted IP workflows (Chris Clarke, Cerberus Tech Ltd)

- 14:30 - 15:00: VSF GCCG (TR-11): A Common API for Video Transport Within the Cloud (John Mailhot, Imagine Communications and Kieran Kunhya, Open Broadcast Systems)

- 15:00 - 15:30: IEEE 1588 Standard Timing for Cloud AI (Nir Nitzani, NVIDIA)

- 15:30 - 16:00: Is Synchronous Ethernet a Must Have or just a Gimmick for the Broadcasting Industry? (Nik Kero)

- 16:00 - 16:30: IP Media Connectivity Within Compute - Where the Industry is Heading (Andy Rayner, Appear)

- 10:00 - 10:30: No Presentations

- 10:30 - 11:00: What is IPMX? Plain Language Summary of the IPMX Technical Recommendations (Jed Deame, Nextera Video)

- 11:00 - 11:30: IPMX Activity Group and IPMX Implementation Testing Event Report (Jack Douglass, PacketStorm)

- 11:30 - 12:00: IPMX in the World of Broadcast (Aaron Doughten, Sencore)

- 12:00 - 12:30: Integrating Standards for Media Over IP: The Role of IPMX, ST 2110, and AES67 (Andrew Starks, Macnica)

- 12:30 - 13:00: Connecting Professional Media Equipment, AV Signal Management, and PC/IT – an IPMX Discussion (Sam Recine, Matrox)

- 13:00 - 13:30: Media Over IP for Live Events: From Screaming to Streaming! (Thomas True, NVIDIA and Michael Kohler, Fuse Technical Group)

- 13:30 - 14:00: Using IPMX and RIST for high performance secure streaming of live content to large numbers of users (Sergio Ammirata, SipRadius)

- 14:00 - 14:30: "Pipes are Now Packets" Part II: What you really need to know about QC, Monitoring & Maintenance for IP (Kevin Salvidge, Leader PHABRIX)

- 14:30 - 15:00: Active and Precise Network Delivery Monitoring (Adi Rozenberg, AlvaLinks)

- 15:00 - 15:30: Path to Dynamic Media Facilities (Ievgen Kostiukevych, EBU)

- 15:30 - 16:00: Delivery Performance Score (DPS) - Grading IN Network for Video Over IP Applications (Adi Rozenberg, AlvaLinks)

- 16:00 - 16:30: Navigating the IP Video Delivery Maze: Understanding the Diverse Paths for Distributing Video Over IP (Stephane Cloirec, Harmonic)

- 10:00 - 10:30: No Presentations

- 10:30 - 11:00: Seamless Transition: Emulating SDI Experience with Make-Before-Break IP Switching (Tsuyoshi Kitagawa, Telestream)

- 11:00 - 11:30: Expanding ST2110 Beyond the Studio - Connecting it all Together (Gerard Phillips and Ryan Morris, Arista Networks)

- 11:30 - 12:00: MADI over IP: The Magic About Flow Configuration and Packet Setup (Andreas Hildebrand, Lawo AG)

- 12:00 - 12:30: JPEG XS in Action for IP Workflows: Standards for Interoperability, Quality and Efficiency (Jean-Baptiste Lorent, intoPIX)

- 12:30 - 13:00: AES67 Meetup Session

- 12:30 - 13:00: JPEG XS and TR-07: The Power Lies Beyond the CODEC (David Edwards, Net Insight)

- 13:00 - 13:30: Multicast Application in RIST (Adi Rozenberg, AlvaLinks)

- 13:30 - 14:00: Verizon NHL 5G Remi Production (Timothy Stevens, Verizon)

- 14:00 - 14:30: NMOS: What's in it for Me? (Stefan Ledergerber, Simplexity)

- 14:30 - 15:00: NMOS Meetup Session

- 14:30 - 15:00: The Dynamic Media Factory – Harmonizing ICT and Media Workflows and Operations (Thomas Gunkel, Skyline Communications)

- 15:00 - 15:30: What's New in NMOS-CPP? (Jonathan Thorpe, Sony Europe)

- 15:30 - 16:00: Taking an Open Source Approach to the Control Plane (Chris Lennon, Ross Video)

- 16:00 - 16:30: NMOS and NDI (Alun Fryer, Ross Video)

- 10:00 - 10:30: IPMX Meetup Session

- 10:30 - 11:00: Transitioning from QAM to IP Video Delivery: Best Practices, Challenges, and Considerations for Providers (Yoann Hinard, Witbe)

- 11:00 - 11:30: Streamlining Software and Hardware Video Encoding: The Power of a Single API for Developers (Thomas Kramer, MainConcept)

- 11:30 - 12:00: The Benefits of IP Infrastructure for Large-Scale LED Deployments (Joe Bleasdale, Megapixel VR)

- 12:00 - 16:30: No Presentations